- Introduction

- Markov analysis is a way of analysing the current movement of some variable in order to forecast its future movement. As a management tool, Markov analysis has been used during the last several years mainly as a marketing aid, for examining and predicting the behaviour of customers in terms of their loyalty to one brand and their patterns of switching to other brands.

- In the field of accounting, it can be applied to the behaviour of accounts receivable.

- Markov Processes

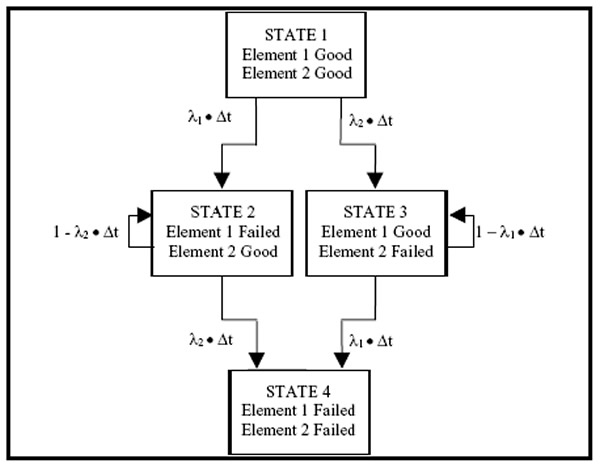

- A Markov process (a chain or sequence of events) is a stochastic process used to analyse decision problems in which the occurrence of a specific event depends on the occurrence of the event immediately prior to it. Basically, a Markov process helps us to identify:

i. A specific state of the system being studied, and

ii. The state-transition relationship

- State and Transition Probabilities

- The occurrence of an event at a specified point in time (say, period n) puts the system in a given state, say En. If, after the passage of one time unit, another event occurs (during time period n+1), the system has moved from state En to state En+1.

- For example, Et may represent the number of persons in the queue at a Mother Dairy booth at time t. As time changes to t+1, the state of the queue also changes to Et+1. The probability of moving from one state to another, or of remaining in the same state, in a single time period is called a transition probability. Since the probability of moving to a given state depends on the state the system was in during the preceding period, a transition probability is a conditional probability.
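The queue example can be written compactly as a conditional probability. This is only a notational sketch: the lowercase a and b stand for two particular queue lengths and are labels assumed here, not symbols from the text. The second line restates the idea that only the immediately preceding state matters.

```latex
\text{transition probability from } a \text{ to } b:\quad P(E_{t+1} = b \mid E_t = a)

P(E_{t+1} = b \mid E_t = a,\, E_{t-1},\, \dots,\, E_0) = P(E_{t+1} = b \mid E_t = a)
```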

- From the point of view of marketing research, it is assumed that the number of states (brands) is finite and that the decision to change brand is taken periodically, so that such changes occur over a period of time. In general, let

i. Ei = the i-th of a finite number m of possible outcomes (i = 1, 2, ….., m) of each event (experiment) in the sequence.

ii. pij = conditional probability of outcome Ej for any particular event (experiment), given that outcome Ei occurred for the immediately preceding event (experiment).

- The outcomes E1, E2, ….., Em are called states and the numbers pij are called transition probabilities.
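One common way to obtain the numbers pij in practice is to count observed switches between states. The sketch below assumes a purchase history for a single customer over two brands; the function name `estimate_transition_probabilities`, the brand labels and the data are all hypothetical and chosen only for illustration.

```python
from collections import Counter


def estimate_transition_probabilities(sequence, states):
    """Estimate p_ij as (number of i -> j moves) / (number of moves out of i)."""
    # Count each consecutive pair (state now, state in the next period).
    pair_counts = Counter(zip(sequence, sequence[1:]))
    matrix = {}
    for i in states:
        total = sum(pair_counts[(i, j)] for j in states)
        # If state i is never followed by anything in the data,
        # no estimate is possible for that row, so it is left as None.
        matrix[i] = {
            j: (pair_counts[(i, j)] / total if total else None) for j in states
        }
    return matrix


# Hypothetical data: the brand bought in each of eight successive periods.
purchases = ["A", "A", "B", "A", "B", "B", "A", "A"]
P = estimate_transition_probabilities(purchases, ["A", "B"])

for state, row in P.items():
    print(state, row)
```

Each row of the result holds the estimated probabilities of moving from one state to every state, including staying put.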

- Characteristics of a Markov Process

- The Markov chain analysis is based on the following assumptions:

i. The given system has a finite number of states, none of which is “absorbing” in nature (a state is said to be absorbing if, once entered, it is never left; for example, a brand from which a customer would never switch away).

ii. The states are both collectively exhaustive and mutually exclusive.

iii. The probability of moving from one state to another depends only on the immediately preceding state.

iv. Transition probabilities are stationary, i.e., they remain constant over time.

v. The process has a set of initial probabilities which may be given or determined.

vi. For a given state in the current time period, the transition probabilities of moving to the alternative states in the next time period (including remaining in the same state) must sum to unity.
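Taken together, these assumptions are what make forecasting possible: with constant transition probabilities and a set of initial probabilities, the state probabilities for later periods follow by repeated multiplication. The following is a minimal sketch under those assumptions; the two-brand matrix, the initial shares and the function names are invented for illustration only.

```python
def validate_transition_matrix(P, tol=1e-9):
    """Check that every entry is a probability and that each row sums to unity."""
    for row in P:
        if any(p < 0 or p > 1 for p in row):
            raise ValueError("transition probabilities must lie in [0, 1]")
        if abs(sum(row) - 1.0) > tol:
            raise ValueError("each row of transition probabilities must sum to 1")


def step(dist, P):
    """Advance the state probabilities by one time period."""
    m = len(P)
    return [sum(dist[i] * P[i][j] for i in range(m)) for j in range(m)]


def forecast(initial, P, periods):
    """State probabilities after the given number of periods.

    Assumes stationary transition probabilities: the same P is used each period.
    """
    validate_transition_matrix(P)
    dist = list(initial)
    for _ in range(periods):
        dist = step(dist, P)
    return dist


# Hypothetical two-brand market: row i gives the probabilities of moving
# from brand i to each brand in the next period.
P = [
    [0.8, 0.2],
    [0.3, 0.7],
]
initial = [0.5, 0.5]  # assumed initial probabilities (market shares)

after_one = forecast(initial, P, 1)
after_two = forecast(initial, P, 2)
print(after_one, after_two)
```

With these assumed numbers, the first brand's share moves from 0.5 to about 0.55 after one period and about 0.575 after two.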


Vaibhav Miglani, an aspirant of knowledge in statistics, is currently pursuing his B.Sc. (H) in Statistics at Ramanujan College, University of Delhi. He wants to become one of the greatest minds in the field of statistics.
