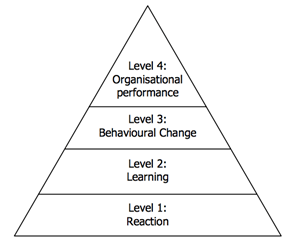

The Kirkpatrick Model

The Kirkpatrick Model is probably the best-known model for analyzing and evaluating the results of training and educational programs. It takes into account any style of training, both informal and formal, to determine aptitude based on four levels of criteria.

This model was developed by Dr. Donald Kirkpatrick (1924 – 2014) in the 1950s. The model can be implemented before, throughout, and following training to show the value of training to the business.

As outlined by this system, evaluation needs to start with level one and then, as time and resources allow, proceed in order through levels two, three, and four. Data from each previous level can be used as a foundation for the analysis at the following level. As a result, each subsequent level provides a more accurate measurement of the usefulness of the training course, yet also calls for a significantly more time-consuming and demanding evaluation.

Kirkpatrick’s four-level system is among the most widely used and in-demand methods for assessing training in businesses today. The Kirkpatrick model has been used for over 30 years by many different types of companies as the major system for training evaluations. It is evident that Kirkpatrick’s vision has made a positive impact on the overall practice of training evaluation.

Four levels of the Kirkpatrick Model

Level 1 Evaluation – Reaction

The primary focus of this level is to understand –

- In what ways did participants like a particular program or training?

- How do participants feel after going through the learning process?

The objective for this level is straightforward: it evaluates how individuals react to the training model by asking questions that establish the trainees’ thoughts. The questions determine whether participants enjoyed their experience and whether they found the material in the program useful for their work. This particular form of evaluation is typically referred to as a “smile sheet.”

As outlined by Kirkpatrick, each program needs to be assessed at this level to help improve the model for future use. On top of that, the participants’ responses are essential for determining how invested they will be in learning at the next level. Even though an optimistic reaction does not ensure learning, an unfavorable one definitely makes it less likely that the user will pay attention to the training.

Examples of resources and techniques for level one –

- Online assessment that can be graded by delegates/evaluators.

- Interviews

- Can be done immediately after the training ends.

- Are the participants happy with the instructor(s)?

- Did the training meet the participant’s needs?

- Are the attendees happy with the educational tools employed (e.g., PowerPoint, handouts)?

- Printed or oral reports provided by delegates/evaluators to supervisors at the participants’ organizations.

- “Smile sheets”.

- Comment forms determined by subjective individual reaction to the training course.

- Post-training program questionnaires.

- Verbal responses that can be noted and taken into consideration.

- Written comments should be especially encouraged.

The only thing that needs to be ensured is that the responses and feedback are honest.
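As a rough illustration of how post-training questionnaire responses might be summarized, the sketch below averages ratings per question and reports the share of favorable answers. The question names, the 1 to 5 rating scale, and the "favorable" threshold of 4 are assumptions made for this example; they are not part of the Kirkpatrick model.

```python
from statistics import mean

# Hypothetical smile-sheet responses: one dict per participant,
# each rating on an assumed scale from 1 (poor) to 5 (excellent).
responses = [
    {"instructor": 5, "met_needs": 4, "materials": 3},
    {"instructor": 4, "met_needs": 4, "materials": 2},
    {"instructor": 5, "met_needs": 3, "materials": 4},
]


def summarize_reactions(responses, favorable_threshold=4):
    """Return the mean rating and share of favorable ratings per question."""
    summary = {}
    for question in responses[0]:
        ratings = [r[question] for r in responses]
        favorable = sum(1 for x in ratings if x >= favorable_threshold)
        summary[question] = {
            "mean": round(mean(ratings), 2),
            "favorable_share": round(favorable / len(ratings), 2),
        }
    return summary


summary = summarize_reactions(responses)
for question, stats in summary.items():
    print(question, stats)
```

A low score on a single question (here, the hypothetical "materials" item) points to what to improve in the next run of the program, which is the main purpose of level one.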

Level 2 Evaluation – Learning

The primary focus of this level involves understanding the learning from the session such as –

- New skills / knowledge / attitudes?

- What was learned?

- What was not learned?

Evaluating at this level is meant to gauge how far participants have developed in expertise, knowledge, or mindset. Exploration at this level is far more challenging and time-consuming compared to level one.

Techniques vary from informal to formal tests and self-assessment to team assessment. If at all possible, individuals take the test or evaluation prior to the training (pre-test) and following training (post-test) to figure out how much the participant comprehended.

Examples of tools and procedures for level two of evaluation –

- Measurement and evaluation are simple and straightforward for any group size.

- You may use a control group to compare.

- Exams, interviews or assessments prior to and immediately after the training.

- Observations by peers and instructors

- Strategies for assessment should be relevant to the goals of the training program.

- A distinct, clear scoring process needs to be determined in order to reduce the possibility of inconsistent evaluation reports.

- Interview, printed, or electronic type examinations can be carried out.

- An interview can be carried out before and after the assessment, though this is time-consuming and unreliable.
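The pre-test/post-test comparison and the optional control group can be sketched in a few lines. This is a minimal illustration, assuming each participant has one numeric score before and one after training; the scores and the function name `average_gain` are hypothetical.

```python
from statistics import mean


def average_gain(pre_scores, post_scores):
    """Mean per-participant change from pre-test to post-test."""
    if len(pre_scores) != len(post_scores):
        raise ValueError("each participant needs both a pre and a post score")
    return mean(post - pre for pre, post in zip(pre_scores, post_scores))


# Hypothetical scores (percent correct), paired by participant.
trained_pre, trained_post = [50, 60, 40, 70], [80, 85, 70, 85]
control_pre, control_post = [55, 60, 45, 65], [60, 60, 50, 70]

trained_gain = average_gain(trained_pre, trained_post)
control_gain = average_gain(control_pre, control_post)

# Gain beyond what the untrained control group achieved on its own.
net_gain = trained_gain - control_gain
print(trained_gain, control_gain, net_gain)
```

Subtracting the control group's gain is one simple way to separate what the training contributed from improvement that would have happened anyway, for example from retaking the same test.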

Level 3 Evaluation – Transfer

Was the learning applied by the attendees?

This level analyzes the differences in the participant’s behavior at work after completing the program. Assessing the change makes it possible to figure out if the knowledge, mindset, or skills the program taught are being used in the workplace.

For the majority of individuals this level offers the truest evaluation of a program’s usefulness. Having said that, testing at this level is challenging since it is generally impossible to anticipate when a person will start to properly utilize what they’ve learned from the program, making it more difficult to determine when, how often, and exactly how to evaluate a participant post-assessment.

This level starts 3–6 months after training.

Examples of assessment resources and techniques for level three of learning –

- This can be carried out through observations and interviews.

- Evaluations have to be subtle until change is noticeable, after which a more thorough examination tool can be used.

- Where are the learned knowledge and gained skills being used?

- Surveys and close observation after some time are necessary to evaluate significant change, importance of change, and how long this change will last.

- Online evaluations tend to be more challenging to integrate. Examinations are usually more successful when incorporated within present management and training methods at the participant’s workplace.

- Quick examinations done immediately following the program are not going to be reliable since individuals change in various ways at different times.

- 360-degree feedback is a tool that many businesses use, but it is not necessary before starting the training program. It is much better utilized after training, since participants will be able to figure out on their own what they need to do differently. After changes have been observed over time, the individual’s performance can be reviewed by others for proper assessment.

- Assessments can be developed around applicable scenarios and specific key performance indicators or requirements relevant to the participant’s job.

- Observations should be made to minimize opinion-based views of the interviewer as this factor is far too variable, which can affect consistency and dependability of assessments.

- The opinion of the participant can also be too variable a factor and makes evaluation very unreliable, so it is essential that assessments focus more on defined factors such as results at work rather than opinions.

- Self-assessment can be handy, but only with an extensively designed set of guidelines.

Level 4 Evaluation – Results

What are the final results of the training?

Commonly regarded as the primary goal of the program, level four determines the overall success of the training model by measuring factors such as lowered spending, higher returns on investment, improved product quality, fewer workplace accidents, more efficient production times, and a higher quantity of sales.

From a business standpoint, the factors above are the main reason for the model; even so, level four results are not usually considered. It is hard to determine accurately whether the results of the training program can be linked to better finances.

Types of assessment strategies and tools used for level four –

- It should be discussed with the participant exactly what is going to be measured throughout and after the training program so that they know what to expect and to fully grasp what is being assessed.

- Use a control group

- Allow enough time to measure / evaluate

- No final results can be found unless a positive change takes place.

- Improper observations and the inability to make a connection with training input type will make it harder to see how the training program has made a difference in the workplace.

- The process is to determine which methods to use and how these procedures are relevant to the participant’s feedback.

- For senior individuals in particular, yearly evaluations and regular agreement of key business targets are essential in order to accurately evaluate business results that are attributable to the training program.
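One common way to express "higher returns on investment" as a number is the standard ROI percentage, net benefit divided by cost. The sketch below combines it with a control-group comparison. All figures are hypothetical, and estimating the benefit as the trained group's change minus the control group's change is an assumption made for illustration, not a procedure prescribed by the Kirkpatrick model.

```python
def training_roi_percent(monetary_benefit, training_cost):
    """ROI as a percentage: (benefit - cost) / cost * 100."""
    if training_cost <= 0:
        raise ValueError("training_cost must be positive")
    return (monetary_benefit - training_cost) * 100 / training_cost


# Hypothetical quarterly sales before and after the training period.
trained_change = 120_000 - 100_000  # group that received the training
control_change = 104_000 - 100_000  # comparable group that did not

# Assumed estimate of the benefit attributable to training:
# the trained group's change beyond the control group's change.
benefit = trained_change - control_change
cost = 10_000  # hypothetical total cost of the program

roi = training_roi_percent(benefit, cost)
print(f"Benefit: {benefit}, ROI: {roi:.1f}%")
```

The arithmetic is simple; the hard part, as noted above, is justifying that the measured change was caused by the training rather than by other factors in the business.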

One of the key strengths of this model is in its simplicity, which was designed to allow it to be understood and used easily by HR practitioners when designing their evaluation tools.

CIRO Model

The CIRO model was developed by Warr, Bird and Rackham and published in 1970 in their book “Evaluation of Management Training”. CIRO stands for context, input, reaction and outcome. The key difference between the CIRO and Kirkpatrick models is that CIRO focuses on measurements taken before and after the training has been carried out.

One criticism of this model is that it does not take into account behaviour. Some practitioners feel that it is, therefore, more suited to management focused training programmes rather than those designed for people working at lower levels in the organization.

- Context: This is about identifying and evaluating training needs by collecting information about performance deficiencies and, based on these, setting training objectives, which may be at three levels:

  - The ultimate objective: The particular organisational deficiency that the training program will eliminate.

  - The intermediate objectives: The changes to the employees’ work behaviours necessary if the ultimate objective is to be achieved.

  - The immediate objectives: The new knowledge, skills or attitudes that employees need to acquire in order to change their behaviour and so achieve the intermediate objectives.

- Input: This is about analysing the effectiveness of the training courses in terms of their design, planning, management and delivery. It also involves analysing the organisational resources available and determining how these can be best used to achieve the desired objectives.

- Reaction: This is about analysing the reactions of the delegates to the training in order to make improvements. This evaluation is inherently subjective, so it needs to be collected in as systematic and objective a way as possible.

- Outcome: Outcomes are evaluated in terms of what actually happened as a result of training. Outcomes are measured at any or all of the following four levels, depending on the purpose of the evaluation and on the resources that are available.

Kaufman’s Five Levels of Evaluation

Training is a vital component for any organization or business to be successful. Thorough evaluations are needed to determine the most effective training programs and how best to implement them. Kaufman’s Five Levels of Evaluation is one such method used to develop both initial and on-the-job training programs. Modeled after University of Wisconsin professor Donald Kirkpatrick’s four-level evaluation method, Roger Kaufman’s theory applies five levels. It is designed to evaluate a program from the trainee’s perspective and assess the possible impacts on the client and society that could result from implementing a new training program.

Level 1 – Input and Process

The first level of Kaufman’s evaluation method is broken down into two parts. Level 1a is the “Enabling” evaluation, designed to evaluate the quality and availability of physical, financial and human resources. This is an input level. Level 1b, “Reaction,” evaluates the efficiency and acceptability of the means, methods and processes of the proposed training program. Test subjects are asked how they feel about the instruction.

Levels 2 and 3 – Micro Levels

Levels 2 and 3 are classified as micro levels designed to evaluate individuals and small groups. Level 2, “Acquisition,” evaluates the competency and mastery of the test group/individual in a classroom setting. Level 3, “Application,” evaluates the success of the test group/individual’s utilization of the training program. Test subjects are monitored to determine how much and how well they implement the knowledge they gained within the organization.

Level 4 – Macro Level

“Organization Output” is level 4 in Kaufman’s method of evaluation. This level is designed to evaluate the contributions and payoffs to the organization as a whole that result from the proposed training program. Success is measured in terms of the organization’s overall performance and the return on investment.

Level 5 – Mega Level

In the final level of Kaufman’s method of evaluation, “Societal Outcomes,” the contributions to and from the client and society as a whole are evaluated. Responsiveness, potential consequences and payoffs are gauged to determine the success of implementing the proposed training program.

Anderson’s Model for Learning Evaluation

Anderson’s Value of Learning model outlines a three-stage learning evaluation cycle that is intended to be applied at the organization level, rather than to specific learning interventions.

According to Brandon Hall’s Learning & Development Benchmarking study, 73% of organizations have learning strategies that are highly aligned to business goals. Oftentimes, the expectations for training programs do not align with business goals.

Example – Suppose a manufacturing company knows they have limits with the output and quality of their products. If the training department creates a program to increase the productivity of their sales team, the training program might actually hurt the business if it increases sales. Customers would be left waiting as production levels struggled to keep up. Training was not delivered where it was needed—in manufacturing productivity.

The Value of Learning model emphasizes the importance of aligning the learning function with the organization’s strategic priorities. It focuses on evaluation of learning strategy, rather than individual programs.

This model provides a three-stage cycle to address the evaluation and value challenge –

- Determine current alignment against strategic priorities.

- Use a range of methods to assess and evaluate the contribution of learning.

- Establish the most relevant approaches for the organization: which measures should be used?

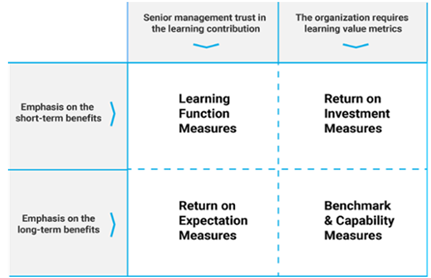

Depending on stakeholders’ values, Anderson’s model recommends evaluating different categories of measure. These categories are outlined in the following table –