The NodeManager is YARN’s per-node “worker” agent, taking care of the individual compute nodes in a Hadoop cluster. Its duties include staying in sync with the ResourceManager, managing the life cycle of application containers, monitoring the resource usage (memory, CPU) of individual containers, tracking node health, managing logs, and hosting auxiliary services that different YARN applications can use.

On start-up, the NodeManager registers with the ResourceManager; it then sends heartbeats with its status and waits for instructions. Its primary goal is to manage application containers assigned to it by the ResourceManager.
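The register-then-heartbeat exchange described above can be sketched in a few lines. This is a toy model only: the class names `ToyResourceManager` and `ToyNodeManager`, the `pending_kills` queue, and the node capacity values are all hypothetical and do not correspond to the Hadoop API.

```python
class ToyResourceManager:
    """Hypothetical stand-in for the ResourceManager side of the exchange."""

    def __init__(self):
        self.nodes = {}          # node_id -> capacity and last reported status
        self.pending_kills = {}  # node_id -> container ids to kill on next heartbeat

    def register(self, node_id, memory_mb, vcores):
        self.nodes[node_id] = {"memory_mb": memory_mb, "vcores": vcores, "status": None}
        self.pending_kills.setdefault(node_id, [])

    def heartbeat(self, node_id, status):
        # Record the node's status, then hand back any queued instructions.
        self.nodes[node_id]["status"] = status
        instructions = self.pending_kills[node_id]
        self.pending_kills[node_id] = []
        return instructions


class ToyNodeManager:
    """Hypothetical stand-in for the NodeManager side of the exchange."""

    def __init__(self, node_id, rm):
        self.node_id = node_id
        self.rm = rm
        self.running = {"c1", "c2"}  # assumed container ids, for illustration

    def start(self):
        # Registration happens once, at start-up (capacity values are assumed).
        self.rm.register(self.node_id, memory_mb=8192, vcores=4)

    def heartbeat_once(self):
        # Report status, then act on whatever instructions come back.
        to_kill = self.rm.heartbeat(self.node_id, {"running": sorted(self.running)})
        for container_id in to_kill:
            self.running.discard(container_id)
        return to_kill
```

In a real deployment the heartbeat would run on a timer; here a single call stands in for one round trip.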

YARN containers are described by a container launch context (CLC). This record includes a map of environment variables, dependencies stored in remotely accessible storage, security tokens, payloads for NodeManager services, and the command necessary to create the process. After validating the authenticity of the container lease, the NodeManager configures the environment for the container, including initializing its monitoring subsystem with the resource constraints specified by the application. The NodeManager also kills containers as directed by the ResourceManager.
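To make the shape of a CLC concrete, the following sketch models it as a plain record. The field names loosely follow the description above rather than the Hadoop Java API, and the paths, URI, and command are made-up illustration values.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ContainerLaunchContext:
    """Illustrative stand-in for the CLC record; not the Hadoop class."""
    environment: Dict[str, str] = field(default_factory=dict)      # env variables
    local_resources: Dict[str, str] = field(default_factory=dict)  # name -> remote URI
    tokens: bytes = b""                                            # security tokens
    service_data: Dict[str, bytes] = field(default_factory=dict)   # payloads for NM services
    commands: List[str] = field(default_factory=list)              # command to create the process


def build_command_line(clc: ContainerLaunchContext) -> str:
    """Join the CLC's command parts into a single command line."""
    return " ".join(clc.commands)


# Hypothetical example values.
clc = ContainerLaunchContext(
    environment={"JAVA_HOME": "/usr/lib/jvm/default"},
    local_resources={"app.jar": "hdfs:///apps/demo/app.jar"},
    commands=["java", "-Xmx512m", "-jar", "app.jar"],
)
```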

Among the responsibilities listed earlier, container management is the core responsibility of a NodeManager. In this role, the NodeManager accepts requests from ApplicationMasters to start and stop containers, authenticates container tokens (a security mechanism that ensures applications use only the resources the ResourceManager has granted them), manages the libraries that containers depend on for execution, and monitors containers’ execution. Operators configure each NodeManager with the amount of memory, number of CPUs, and other resources available at the node by way of configuration files (yarn-default.xml and/or yarn-site.xml). After registering with the ResourceManager, the NodeManager periodically sends a heartbeat with its current status and receives instructions, if any, from the ResourceManager. When the scheduler gets to process the node’s heartbeat (which may happen some time after the heartbeat arrives), containers are allocated against that NodeManager and are subsequently returned to the ApplicationMasters when the ApplicationMasters themselves send a heartbeat to the ResourceManager.
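As an illustration of the per-node resource configuration, the sketch below parses a small yarn-site.xml fragment and reads the node's memory and CPU settings. The property names `yarn.nodemanager.resource.memory-mb` and `yarn.nodemanager.resource.cpu-vcores` are standard YARN settings; the values shown and the helper `read_properties` are assumptions made for the example.

```python
import xml.etree.ElementTree as ET

# Example fragment; the values are illustrative, not recommendations.
YARN_SITE = """<configuration>
  <property>
    <name>yarn.nodemanager.resource.memory-mb</name>
    <value>16384</value>
  </property>
  <property>
    <name>yarn.nodemanager.resource.cpu-vcores</name>
    <value>8</value>
  </property>
</configuration>"""


def read_properties(xml_text):
    """Return a {name: value} dict from Hadoop-style configuration XML."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.findall("property")}


props = read_properties(YARN_SITE)
node_memory_mb = int(props["yarn.nodemanager.resource.memory-mb"])
node_vcores = int(props["yarn.nodemanager.resource.cpu-vcores"])
```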

The NodeManager may also kill containers as directed by the ResourceManager. A container may be killed if:

- The ResourceManager sends a signal that an application has completed.

- The scheduler decides to preempt it for another application or user.

- The NodeManager detects that the container exceeded the resource limits as specified by its ContainerToken.
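The three conditions above can be expressed as a small decision function. This is a sketch under stated assumptions: the enum `KillReason`, the function `kill_reason`, its parameters, and the order in which the conditions are checked are all hypothetical, and only memory is considered as the limited resource.

```python
from enum import Enum
from typing import Optional


class KillReason(Enum):
    APPLICATION_COMPLETED = "application completed"
    PREEMPTED = "preempted by scheduler"
    RESOURCE_LIMIT_EXCEEDED = "resource limit exceeded"


def kill_reason(app_completed: bool, preempted: bool,
                used_mb: int, limit_mb: int) -> Optional[KillReason]:
    """Return why a container should be killed, or None to let it keep running."""
    if app_completed:
        return KillReason.APPLICATION_COMPLETED
    if preempted:
        return KillReason.PREEMPTED
    if used_mb > limit_mb:
        # Usage is compared against the limit granted to the container.
        return KillReason.RESOURCE_LIMIT_EXCEEDED
    return None
```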

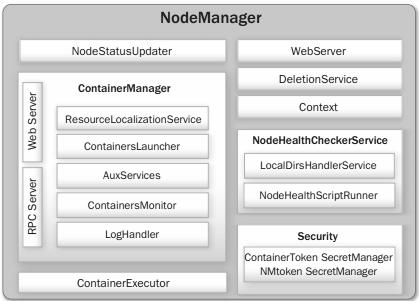

The NodeManager is made up of several components, described below.

ContainerManager – This component is the core of the NodeManager. It is composed of the following subcomponents, each of which performs a subset of the functionality that is needed to manage the containers running on the node.

- RPC Server – ContainerManager accepts requests from ApplicationMasters to start new containers, or to stop running ones. It works with NMToken SecretManager and Container Token SecretManager (described later) to authenticate and authorize all requests. All the operations performed on containers running on this node are recorded in an audit log, which can be postprocessed by security tools.

- Resource Localization Service – Resource localization is one of the important services offered by NodeManagers to user applications. Overall, the resource localization service is responsible for securely downloading and organizing various file resources needed by containers. It tries its best to distribute the files across all the available disks. It also enforces access control restrictions on the downloaded files and puts appropriate usage limits on them.

- Containers Launcher – The Containers Launcher maintains a pool of threads to prepare and launch containers as quickly as possible. It also cleans up the containers’ processes when the ResourceManager sends such a request through the NodeStatusUpdater or when the ApplicationMasters send requests via the RPC server. The launch or cleanup of a container happens in one thread of the thread pool, which will return only when the corresponding operation finishes. Consequently, launch or cleanup of one container doesn’t affect any other operations and all container operations are isolated inside the NodeManager process.

- Auxiliary Services – An administrator may configure the NodeManager with a set of pluggable, auxiliary services. The NodeManager provides a framework for extending its functionality by configuring these services. This feature allows per-node custom services that specific frameworks may require, yet places them in a local “sandbox” separate from the rest of the NodeManager. These services must be configured before the NodeManager starts. Auxiliary services are notified when an application’s first container starts on the node, whenever a container starts or finishes, and finally when the application is considered to be complete.
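Of the subcomponents above, the Containers Launcher's thread-pool model lends itself to a short sketch. Each launch occupies one worker thread until it finishes, so a slow launch does not hold up the others. The function names, the pool size, and the use of `time.sleep` to simulate start-up work are assumptions for illustration only.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def launch(container_id, startup_seconds):
    """Simulate preparing and launching one container; blocks its worker thread."""
    time.sleep(startup_seconds)
    return f"{container_id}: RUNNING"


def launch_all(requests, pool_size=4):
    """Launch each (container_id, startup_seconds) request on a pooled thread."""
    with ThreadPoolExecutor(max_workers=pool_size) as pool:
        futures = [pool.submit(launch, cid, secs) for cid, secs in requests]
        # Collect results in submission order; each future completes independently.
        return [f.result() for f in futures]
```

For example, `launch_all([("c1", 0.02), ("c2", 0.0)])` runs both launches concurrently and returns their results in request order.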

Container Executor – This NodeManager component interacts with the underlying operating system to securely place files and directories needed by containers and subsequently to launch and clean up processes corresponding to containers in a secure manner.

Node Health Checker Service – The NodeHealthCheckerService checks the health of a node by periodically running a configured script. It also monitors the health of the disks by periodically creating temporary files on them. Any changes in the health of the system are sent to NodeStatusUpdater (described earlier), which in turn passes the information to the ResourceManager.
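The disk check described above, creating a temporary file to confirm a disk is usable, might look roughly like the following. This is a minimal sketch and not the NodeManager's actual implementation; the function names `disk_is_healthy` and `failed_disks` are hypothetical.

```python
import tempfile


def disk_is_healthy(directory):
    """Probe a directory by creating, writing, and removing a temporary file."""
    try:
        with tempfile.NamedTemporaryFile(dir=directory) as probe:
            probe.write(b"probe")
            probe.flush()
        return True
    except OSError:
        # Missing directory, read-only filesystem, full disk, and similar failures.
        return False


def failed_disks(directories):
    """Return the directories that did not pass the probe."""
    return [d for d in directories if not disk_is_healthy(d)]
```

A periodic caller could compare successive `failed_disks` results and report only the changes upstream.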